The static type system of Sparkground

For my undergraduate computer science project course

Features

Base types

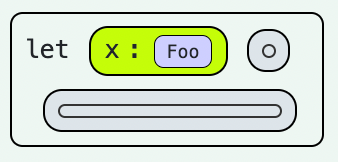

Sparkground has an infinite family of base types, each written as a symbol where a type is expected. For instance, in (let (((x Foo) ·)) ·), the binding x is annotated with base type Foo.

Some base types have special built-in behaviour.

- Values of the following base types are created by literal syntax:

BooleanIntegerNumberStringSymbol

- Top and bottom types (see subtyping):

Anyis the top typeNeveris the bottom type

- Gradual typing:

?is the “unknown” type

- Produced and consumed by built-in functions:

Empty(empty list, used as a “null” type)Graphic(describes something that can be drawn to the screen)

Type constructors

Sparkground supports type constructors, which are (uninterpreted) functions that operate on types to construct new types. Like with base types, any symbol written where a type constructor is expected (such as (type (Here ·)), where type is syntax that denotes a type expression 1) will be treated as one. In practice, all useful type constructors are built-in, as there is currently no pleasant interface for users to define their own.

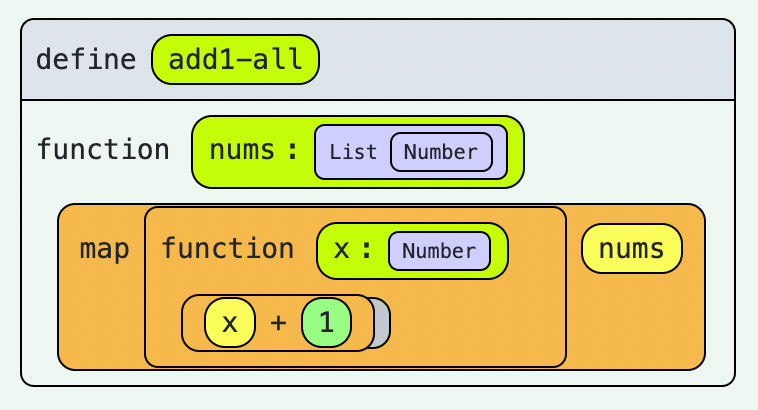

List is a type constructor that takes one type parameter—say X—where (List X) denotes lists of objects of type X. Lists are core to the language and can be created with literal syntax. (In fact, like Scheme, expressions are syntactically just nested lists.) Note that at runtime, lists may be hetergeneous, such as (1 two "three"). The best static type for heterogeneous lists in the current system is (List J), where J is the join () of the types in the list. As the current system does not support union types, J usually ends up as Any, the top type. (But see type join and meet for the complete definition.)

Other built-in type constructors relate to function types:

-

is the type of (fixed-arity) functions from to .

The type constructor

Functiontakes an arbitrary positive number of type parameters—conceptually, it is overloaded with the infinite overload set , where is the type of functions with arity . -

is the type of variadic functions from , and zero or more , to .

Like

Function,Function*is conceptually overloaded, with the overload set .The last argument type is treated as optional and infinitely repeatable (like a Kleene star). More concretely, is modeled by the infinite intersection type .

For example:

string-repeathas type(Function String Integer String), which pretty-prints as(String Integer → String)string-concatenatehas type(Function* String String), which pretty-prints as(String... → String).

Parametric polymorphism

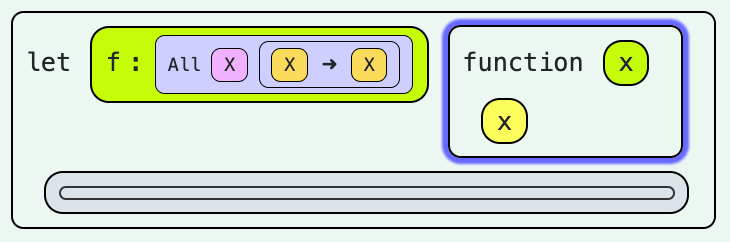

Parametric polymorphism is the ability to endow an expression with an infinite family of types by allowing type constructor parameters to vary by usage. For example, is the type of values that, for every possible substitution of , can be treated as a function from to . Such types are polymorphic, generic, or universally quantified. Values of this type include and .

In Sparkground, the special syntax names a universally quantified type , which can refer to the bound type parameters . For example, the built-in cons function has type (All (#E) (Function #E (List #E) (List #E))).

Sparkground has impredicative polymorphism: it admits types in contravariant position, and type variables can be instantiated to types.

Subtyping

Subtyping rules define which types can be correctly assigned to each other. When ( is a subtype of ), every expression of type can also be treated as having type ; hence, assigning an expression of type to an annotated binding of type is allowed.

The subtyping relation is reflexive. To ensure this for certain simple cases, we start with the following two rules:

- for every base type

- for every type variable

Next, we add rules that introduce top and bottom types. is the top type, so everything can be assigned to it:

- for every type

is the bottom type, so it can be assigned to anything:

- for every type

Expressions typeable as will never produce a value, hence the name.

Subtyping rules that relate types with different tags are referred to as nominal. Built-in nominal rules are:

- for every type

The rest of the subtyping rules are structural, relating types built from the same type constructors. To enable this, each type constructor has an associated variance signature, such as . In general, if is given parameters as a type constructor, parameter of has variance , corresponding to covariance, contravariance, and invariance, respectively.

Some built-in type constructors have variance signatures:

All other type constructors are invariant in every position. (This could be amended in the future for user-defined type constructors.)

With type parameter variance in place, the schema for structural subtyping rules is:

- if for all

can be read as and are compatible according to the variance sign . It is the smallest relation such that:

- if [covariant];

- if [contravariant];

- if [invariant].

For example, , since:

- Both are unary function types, built from type constructor ;

- is contravariant in its parameter type and covariant in its return type, i.e.,

- , so , and ;

- , so , and .

Finally, universally quantified types are related when they bind the same variables and their body types are related:

- if .

This rule alone is sound, but does not admit much flexibility. An assignment of to a binding of type fails to typecheck, because cannot be polymorphically generalized. This is a known deficiency. Fixing it, with the new syntax, UI, and typing rules that entails, falls under future work. (Parametric polymorphism exists in Sparkground primarily to ease working with built-in functions like map and list, which does work with the current rules.)

Gradual typing

Gradual typing is the term of art for optional static typechecking with strong guarantees for fully annotated programs. 2

In a gradually typed language, at each point where the type inference algorithm requires a manual annotation, programmers may choose instead to leave parts of their programs statically untyped. In effect, this disables the static typechecker in these parts of the program. But importantly, when programmers choose to fully annotate their programs, gradual type systems provide exactly the same soundness properties as their non-gradual counterparts.

To implement gradual typing, we define a consistent subtyping relation , which says that (” is a consistent subtype of ”) iff and are related by the subtyping relation where they are both known. [3 § 3–4] That is, the consistent subtyping rules are precisely the subtyping rules with "" replaced by "", plus:

- for every type

- for every type

Despite the name “consistent subtyping”, this is a weakening of the usual subtyping relation. The name comes from the “type consistency” relation , used to define gradual typing without subtyping. [3 § 3]

Whenever no subtyping rules apply (other than reflexivity), agrees with , which is symmetric:

- — right side has unknown structure, OK;

- — matching outer structure, , and , OK;

- — matching outer structure, , and , OK;

- — mismatched outer structure, error;

- — matching outer structure, but , error.

When subtyping rules do apply, is not symmetric:

- — matching outer structure, , and return type has unknown structure, OK;

- — matching outer structure, , error;

- — matching outer structure, , and parameter type has unknown structure, OK;

- — matching outer structure, , and parameter type has unknown structure, error.

Note that is also not transitive: and , but . From this perspective, it is clear how any program can be made to typecheck in a gradual system—by smuggling expressions from one side to the other through .

agrees with when no s appear on either side (in which case “where they are both known” is everywhere). This is why gradual type systems provide the same type safety guarantees as their non-gradual counterparts when no bindings have the type .

Local type inference

Local type inference is a family of syntax-directed type inference algorithms that are incomplete, but work in the presence of subtyping and impedicative polymorphism. 4 In this context, “local” means that expression types are inferred according to the types of neighboring expressions in the syntax tree. Constraints are generated and solved once per node, in bottom-up or top-down order, starting from where type information is available. (If insufficient type information is available, users may be required to provide some type annotations, so that there is somewhere to start.) Types inferred bottom-up are called synthesized, while those inferred top-down are called checked or inherited. 5 Local inference algorithms are used industrially, in languages such as Java, Scala, Swift and TypeScript. They are also used for Typed Racket.

Polymorphic type argument inference

Sparkground implements synthesis mode. This provides a typechecking mechanism typical of languages with subtyping; but it also provides type argument inference for polymorphic function applications, provided that types for the function call’s arguments are available.

When the type checker reaches a function application node in the syntax tree, it synthesizes the node’s type as follows:

- The type of

fis synthesized. - The number of arguments () is checked against the arity of

f, which may be variadic. - The type of each argument is synthesized.

- A constraint system is generated with the known argument types as lower bounds on

f’s parameter types. [4 § 3.2–3.3] - A solution to the constraint system is computed. This will be a minimal solution for the return type

Roff, whenever such a solution exists. If no solution is available, a typechecking error is emitted. Ris instantiated with type arguments according to the constraint system’s solution. This yields the synthesized type for .

Each constraint system consists of subtype constraints and untyped constraints.

Untyped constraints arise when a type with free type variable #X is computed to be bounded above or below by ?. In these cases, the constraint “#X is untyped” enforces that is the only correct solution—in effect, #X has been “poisoned” by its untyped context, and forced to also become untyped. This is how gradual typing disables the typechecker in a cascading way. Untyped constraints are straightforward, so we will ignore them from here on.

Subtype constraints look like "", expressing that L must be assignable to #X, and #X must be assignable to U. One-sided constraints can be expressed by setting or .

A solution to the constraint system is a substitution such that for all . Note that trivial solutions such as are “correct”, but not useful; therefore, the constraint solver treats ? as a formal base type, to keep ? usage in computed solutions to a minimum.

A minimal solution to for (return type) R is an -solution such that for every possible -solution . Not every pair has a minimal solution—in particular, when R is invariant in a type variable with more than one admissible value. For example, let , and note that only if , and thus only if . So whenever multiple (nontrivial) solutions are admissible, none of them are minimal. (If , there is exactly one nontrivial solution; otherwise there are multiple.) [4 §3.5]

The existence of pairs with no minimal solution is a well-known problem, with an associated design tradeoff: should the inference of R succeed in such cases (with a solution that may not be minimal), or should it fail? 6

Different languages solve this problem in different ways. Typed Racket allows these inferences to succeed, using some appropriate heuristic for whether to resolve R up or down—and it happens to infer the type (-> Any Any) for the return value (λ (x) x). Meanwhile, Scala lets it fail: when given def xToX = x => x, the typechecker complains that it “could not infer type for parameter x of anonymous function”. Proponents of the latter behaviour argue that inference should only succeed when the type it finds is no more general than one the user could have given with a manual annotation. [6 § 4.1]

Due to a combination of factors…

- Sparkground is geared toward novices;

- type annotations take up a significant amount of space in the block-based UI;

- adding type annotations in the block-based UI can be a tedious process

…Sparkground works more like Typed Racket than Scala here: whenever R is invariant in a type variable, the variable is resolved to its lower bound. To reiterate, this is a valid solution to the constraint system; at worst, inference chooses an undesirable (but still correct) type for some terms. From empirical observations, such a problem appears rare enough to be acceptable.

Function type synthesis

The type of each lambda abstraction is synthesized as follows:

- The current type context is augmented, with the parameters bound to their annotated types (where when has no type annotation).

- The type

Bofbodyis synthesized under the new type context. - The overall synthesized type is computed as .

Type join and meet

There are two binary operations on types, join and meet . They give us a lattice of types corresponding to the subtyping order—except when ?s are present, which force the result to be ?. These operations are called upon by the constraint generator to combine the lower and upper bounds of multiple subtype constraints. Lower bounds are combined with join and upper bounds with meet . In the final constraint system, each variable will have at most one subtype constraint.

Type join is defined as:

Type meet is the dual:

Note that incomparable types and created from the same type constructor join elementwise by , and meet elementwise by . This reads as the combination of types and with respect to variance (resp. ). The intent is to preserve the direction (join/meet, up/down) when the parameter is covariant, and flip the direction when the parameter is contravariant. Formally,

is not defined—if a type constructor is invariant in any parameter, its types do not combine elementwise.

Notes and references

Footnotes

-

typeexpressions ensure that type blocks remain rendered as types by the editor while not in annotation context. They are not currently useful for any other purpose; evaluating one throws an error. ↩ -

J. Siek, “What is Gradual Typing” (2014): https://wphomes.soic.indiana.edu/jsiek/what-is-gradual-typing/ ↩

-

J. Siek and W. Taha, “Gradual Typing for Objects” (2007), European Conference on Object-Oriented Programming: https://doi.org/10.1007%2F978-3-540-73589-2_2 ↩ ↩2

-

B. Pierce and D. Turner, “Local Type Inference” (1999, 2000), ACM Transactions on Programming Languages and Systems, Vol. 22, No. 1: https://doi.org/10.1145/345099.345100 ↩ ↩2 ↩3

-

M. Odersky, C. Zenger and M. Zenger, “Colored Local Type Inference” (2001), ACM POPL ‘01: https://doi.org/10.1145/360204.360207 ↩

-

H. Hosoya and B. Pierce, “How Good is Local Type Inference?” (1999): https://doi.org/20.500.14332/7082 ↩ ↩2